AI Large Language Models: Still a BS Firehose

Tagged: ArtificialIntelligence / CatBlogging / CorporateLifeAndItsDiscontents / JournalClub / NotableAndQuotable / Sadness / SomebodyAskedMe

Somebody asked me, in the course of a family video call, what I thought about the latest AI Large Language Models (LLMs) and how they’re being used for everything. Have they improved from their previous BS conditions? Ahem. Uh… no.

The Weekend Prior

[This post is slightly delayed by brain fog and by life in general, so it’s being posted about 9 days later than intended. Hence a post date of 2024-Jun-22, but some of the references below are later.]

We’ve previously expressed some… acerbic opinions about LLMs and their various forms of misbehavior, obfuscation, and generally highly persuasive BS.

For example, their inexhaustible BS fountains of persuasive misdirection [1]:

What do you call a person who is very good at sounding persuasive and plausible, but absolutely bereft of fidelity to fact? A BS artist.

…

When I asked it a technical question, it made a very plausible-sounding argument, complete with citations to the relevant scientific literature. I was really impressed: the papers it cited were by famous scientists working in the correct area, published in important journals, with titles that were spot-on relevant to my interests. So why hadn’t I, as a scientist familiar with the area, already read those papers? Because they were all fake! Every single one was an hallucination, absolutely bereft of existence.

Just in case that’s not enough, later in that post we note a reporter’s experience: a Microsoft AI threatened to break up his marriage and murder him! Were this an actual person, that would have been an actual crime.

The name “ChatGPT” is, of course, rather bizarre, especially in French. [2] “Chat, j’ai pété” (“Cat, I farted”) is mildly transgressive in French, but borderline comically scatological in English.

Of course people abuse it. There’s more work in fact-checking every single word than there is in writing it yourself in the first place. That hasn’t stopped people from being naïvely charmed by the persuasive powers of LLMs:

- Academics reviewing articles by their colleagues for publication in journals have occasionally skimped on the task, trying to sneak in an AI review. [3] The results would, of course, be hilarious if they were not real.

- Even more disgusting, entire papers have been submitted to journals that bear the stigmata of having been AI-generated [4]. They are, of course, nonsense, but some have nonetheless sneaked past peer review.

With that as a Bayesian prior, do we have evidence sufficient to change our minds?

Better Now? Nope, Still BS

Bias optimization, and how to react to structural lying

It seems every tech company wants to force AI down your throat, putting it into products whether you want it or not. For example, Google searches now put an AI-generated guess at the top of your results (unless you append ‘&udm=14’ to the search URL, a de-mumble-ification cheat code, to Bowdlerize a term coined by Cory Doctorow).
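
For the curious, here’s a minimal sketch of that cheat code in Python. It assumes only that Google keeps honoring the ‘udm=14’ parameter (which selects the plain “Web” results, and which as far as I know Google does not formally document, so it may change without notice). The helper name google_web_only_url is made up for illustration; it just builds an ordinary search URL with the extra parameter tacked on.

```python
from urllib.parse import urlencode


def google_web_only_url(query: str) -> str:
    """Build a Google search URL that requests the plain 'Web' results view.

    Assumption: Google honors the undocumented udm=14 parameter, as it did
    in mid-2024. Hypothetical helper, for illustration only.
    """
    # urlencode keeps dict insertion order and encodes spaces as '+'.
    params = {"q": query, "udm": "14"}
    return "https://www.google.com/search?" + urlencode(params)


if __name__ == "__main__":
    print(google_web_only_url("edible mushrooms"))
    # prints: https://www.google.com/search?q=edible+mushrooms&udm=14
```

Most browsers also let you register a URL like this as a custom search engine, so you needn’t type the parameter by hand.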

Apple, alas, is no different: they announced a suite of AI stuff at WWDC, despite the problems inherent in that. [5] The lying is probably an intrinsic property of LLMs, but they are determined to deploy it anyway:

… Apple CEO Tim Cook admitted outright that he’s not entirely sure his tech empire’s latest “Apple Intelligence” won’t come up with lies and confidently distort the truth, a problematic and likely intrinsic tendency that has plagued pretty much all AI chatbots released to date.

Pants on Fire

It’s an uncomfortable reality, especially considering just how laser-focused the tech industry and Wall Street have been on developing AI chatbots. Despite tens of billions of dollars being poured into the tech, AI tools are repeatedly being caught coming up with obvious falsehoods and — perhaps more worryingly — convincingly told lies.

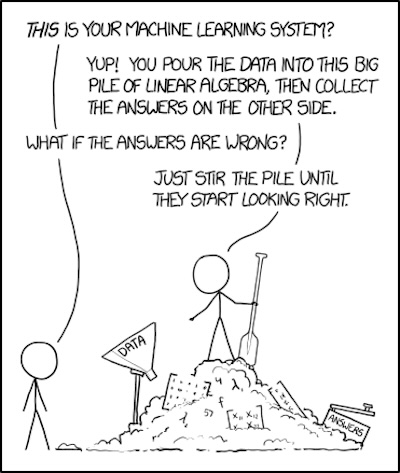

In other words, matters are as presaged by this as-usual-prophetic XKCD #1838. “Just stir the pile until the answers start looking right” is nowhere near a strategy for truth-telling!

Do you just pile up steel beams to make a bridge, and rearrange them until cars no longer fall off? Of course not! You try to know what you are doing, in the civil engineering sense and the social impact sense, before you start building.

Nothing less than that will do here.

Also, we should make sure our AIs are, to use a technical term, “aligned” with human values. Otherwise, once we make an unaligned Artificial Super Intelligence (ASI), humanity dies shortly thereafter.

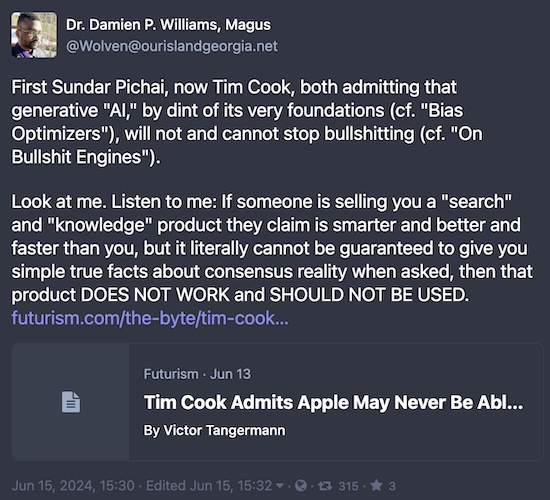

Summary from a philosophy professor at UNC Charlotte, specializing in science, technology, and society, reacting to the same information:

The bit where he says, “Look at me. Listen to me:” is important. Any source that is highly persuasive, but not committed to fact in any way, DOES NOT WORK and SHOULD NOT BE USED. Excellent advice, that.

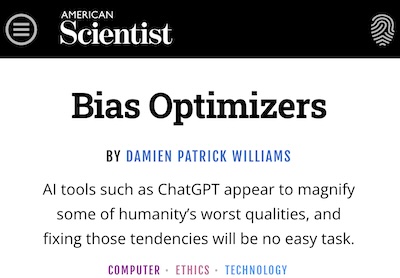

In case you are not persuaded by social media (good for you!), Prof. Williams has provided both a video of a seminar, shown here, evocatively titled “On Bullsh*t Engines”, and a more formal, peer-reviewed article published in American Scientist. [6]

A pointed example from the video (4:03), on AI-generated guides to foraging for edible mushrooms, available on Amazon despite their many errors:

Now, when it comes to misidentifying plants out in the wild, you can have a bad time in a number of ways. One of those ways is, you know, you misidentify something as safe when it’s actually poisonous, you get a rash. But when it comes to things like mushroom guides and foraging for food one of the ways that that can go wrong is, uh… you die.

As writer Terry Pratchett is alleged to have said, “All mushrooms are edible, but some only once.” Idiotic AI systems like this will unerringly guide you to that one last mushroom.

He has lots of other examples, some showing horrifying mistakes because the AI was trained on rather raw Internet texts full of prejudice, racism, sexism, and fascism. Why in the world would you want to listen to that?

At 38:55 in the video:

When we uncritically make use of these tools what we are doing is we are muddying the process of generating knowledge together. We are embodying and empowering a system which does not in any way shape or form care about what is true or what is factual, does not care about the impacts …

…

They do not care about truth. They do not care about fact. They are in fact bullsh*t engines.

Liability Issues

It’s bad enough that they just lie with impunity: bullsh*t doesn’t so much oppose truth as ignore it entirely as irrelevant. It’s so bad that people are exposed to lethal risks, as shown in the mushroom example above.

But, in accordance with the 3rd Rule of Crime by writer John Rogers:

Rule 3: Nothing ever stops until a Rich White Guy goes to jail.

… it now appears Google’s lawyers are becoming – slowly and dimly – aware that their main product is turning into a liability machine. This is both through generating libel [7], and, of course, the mushrooms [8] (which seems to be everybody’s favorite example now).

The current case appears to be an implication by Google that a high-level chess player admitted to “using an engine”, i.e., cheating. He admitted no such thing, and apparently no such thing happened. The first liability suit was for $100 million; though it failed on procedural grounds, the victim will without a doubt take a second shot. Google’s AI repeated the allegation of cheating until the Atlantic reporter asked Google for comment, at which point it was quickly hidden in typical craven corporate fashion.

Google’s AI has also asserted that one should eat a small rock every day, and that Barack Obama is Muslim. This is what happens when you train on raw public Internet text!

Microsoft’s Bing has a similar history of libel: it asserted that veteran aviation consultant Jeffrey Battle had pled guilty to seditious conspiracy against the United States, when in fact it was an entirely distinct person with a similar name. A person would know to check the facts before saying something like that; AIs currently have no commitment to the truth in that regard. There is, of course, a lawsuit in progress.

These are dangerous, stupid systems, and their deployment seemingly everywhere is going to be a bonanza for liability lawyers. For the companies deploying them, not so much.

The FTC has already issued warnings. Whether corporate management will hear them, above the siren song of huge-but-fictitious profits, is another matter.

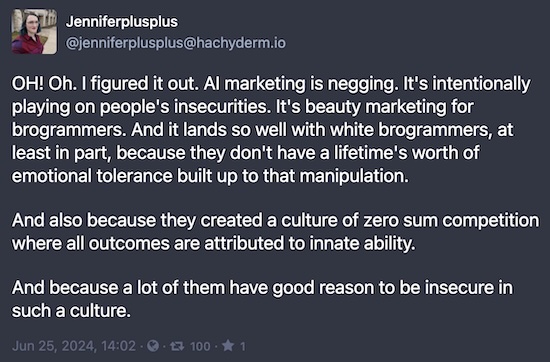

That siren song was recently put sharply into perspective for me:

‘Negging’ is a particularly odious tactic used by unscrupulous sorts to seduce sexual partners by preying upon their insecurities (pointing out “negatives”). Similarly here, AI marketing is negging for the ‘brogrammers’ who view themselves as cutting edge, don’t want to be left behind, and have no history with this kind of manipulation to help them resist it.

Disturbing, but it seems apt.

The Rising Rage Against the Machine

People who make things, who actually have to do things, seem to have caught on. Their management, as always seems to be the case, has not:

In my employment years, I watched almost that exact scenario play out multiple times. A manager who is possessed of only half a clue about some new-ish method or technology tries to force it everywhere. The results are uniformly disastrous, and eventually get blamed on “Expert A” above, who was screaming “No!” all the time.

A colorful case in point is a rant [9] that got considerable circulation in the more math/sci/tech corners of social media a couple of weeks ago. Like many of my generation, I generally avoid profanity. However, even I admit that one must have something in reserve for special cases, and this is indeed a… special case.

It expresses – eloquently, ribaldly, and scatologically – the absolute rage people with technical and scientific training have for the latest dumb management positions on AI. It is also hilarious. (Never neglect humor: it may be your sharpest rhetorical tool!)

Almost all the current AI hype is from grifters, and the summary should be:

We have a few key things that a grifter does not have, such as job stability, genuine friendships, and souls.

…

This entire class of person is, to put it simply, abhorrent to right-thinking people. They’re an embarrassment to people that are actually making advances in the field, a disgrace to people that know how to sensibly use technology to improve the world, and are also a bunch of tedious know-nothing bastards that should be thrown into Thought Leader Jail until they’ve learned their lesson…

I spent decades in applied statistics and machine learning. And yes, that’s how we generally see the managers yattering on subjects about which they know nothing.

Now, for those of you whose taste runs to somewhat less profanity and somewhat more peer review, here is a peer-reviewed article saying largely the same thing. [10] But with less profanity. And, alas, less humor.

They did manage to get the word ‘bullsh*t’ past the editors, so kudos for that. And… who knew? There turns out to be a whole academic classification scheme for the various forms and manifestations of bovine ordure. As they point out, St Augustine distinguished 7 types of lies that go against truth, so it should not be surprising to find various ways of ignoring the truth.

But their conclusion is simply that ChatGPT is bullsh*t:

Calling their mistakes ‘hallucinations’ isn’t harmless: it lends itself to the confusion that the machines are in some way misperceiving but are nonetheless trying to convey something that they believe or have perceived. This, as we’ve argued, is the wrong metaphor. The machines are not trying to communicate something they believe or perceive. Their inaccuracy is not due to misperception or hallucination. As we have pointed out, they are not trying to convey information at all. They are bullsh*tting.

…

… the inaccuracies show that it is bullsh*tting, even when it’s right. Calling these inaccuracies ‘bullsh*t’ rather than ‘hallucinations’ isn’t just more accurate (as we’ve argued); it’s good science and technology communication in an area that sorely needs it.

Even the finance bros seem to have caught on: Goldman Sachs is now warning that the returns from AI investment are… well, not what people think. [11]

Apparently we’ve spent about a billion, and are on track to spend a trillion, for systems that are persuasive, but lie like Donald Trump. (My comparison, not theirs. But it seems apt, no?)

Worst of all, to feed these monsters we’re sacrificing our power grids, our fresh water capacity, the careers of creative freelancers, and the factual integrity of our scientific literature, in return for nothing useful:

The Weekend Conclusion

Look, it’s not hard: these things are unfit for any purpose.

Even if your purpose is just to generate a fantasy, do you really want your fantasies shaped by something scraped off the bottom of the Internet? Wouldn’t it be healthier to train your own imagination?

This whole affair is an instance of Brandolini’s Law:

The amount of energy needed to refute bullsh*t is an order of magnitude bigger than that needed to produce it.

They’ll keep doing it until either they win or, as John Rogers’ 3rd Rule of Crime suggests above, some Rich White Guy goes to jail. We should all hope for the latter.

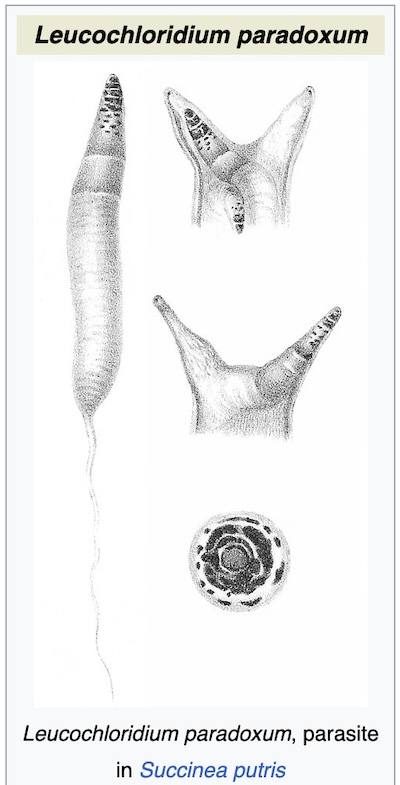

Per author Charlie Stross, who is fascinated by parasites as material for his novels, these things are the intellectual equivalent of Leucochloridium paradoxum. [12] That’s a particularly disgusting worm that infests the brain and eye stalks of snails, driving the snail to suicidal behavior that leads to predation by birds, so the worm can progress to its next host. You don’t want it. Really.

Here at Château Weekend, we’re somewhat alarmed at this. As you can see in the picture, both the Weekend Publisher and the Assistant Weekend Publisher are mildly alarmed, disturbing their natural cat aplomb.

We’re only mildly alarmed, because we have boundless faith in the ability of corporate managers to screw it up into a state of relative harmlessness. The radioactivity inside the blast radius may destroy their company, but probably not humanity.

At least, until some idiot makes an ASI. For preventing that, you need to understand Eliezer Yudkowsky. (It’s hard for your humble Weekend Editor to understand why so many people so stubbornly resist understanding that.)

Of course now, more than ever: Ceterum censeo, Trump incarcerandum esse. (“Furthermore, I hold that Trump must be imprisoned.”)

Notes & References

1: Weekend Editor, “On ChatGPT and Its Ilk”, Some Weekend Reading blog, 2023-Feb-15. ↩

2: Weekend Editor, “ChatGPT and Francophone Misbranding”, Some Weekend Reading blog, 2023-Mar-25. ↩

3: Weekend Editor, “On Using ChatGPT for Peer Review”, Some Weekend Reading blog, 2023-Jun-14. ↩

4: Weekend Editor, “On Detecting Academic Papers with AI-Written Content”, Some Weekend Reading blog, 2024-Mar-27. ↩

5: V Tangerman, “Tim Cook Admits Apple May Never Be Able to Make Its AI Stop Lying”, Futurism: The Byte, 2024-Jun-13. NB: This includes some quotes from a WaPo article, but it’s paywalled and the Wayback Machine won’t cough it up, for no particularly obvious reason. ↩

6: DP Williams, “Bias Optimizers”, American Scientist 111:4, p. 204, 2023-Jul/Aug. DOI: 10.1511/2023.111.4.204. Alas, paywalled. ↩

7: M Wong, “Google Is Turning Into a Libel Machine”, The Atlantic, 2024-Jun-21. A non-paywalled version at the Wayback Machine is available. ↩

8: T Hunter, “Using AI to spot edible mushrooms could kill you”, Washington Post, 2024-Mar-18. A non-paywalled version at the Wayback Machine is available. ↩

9: Nik Suresh (a.k.a. “Ludic”), “I Will F***ing Piledrive You If You Mention AI Again”, Lucidity blog, 2024-Jun-19. ↩

10: MT Hicks, J Humphries, and J Slater, “ChatGPT is bullsh*t”, Ethics & Info Tech 26:38, 2024-Jun-08. DOI: 10.1007/s10676-024-09775-5. ↩

11: K Balevic, “Goldman Sachs says the return on investment for AI might be disappointing”, Business Insider, 2024-Jun-29. ↩

12: Yes, this means I’ve just compared LLM AIs to a parasitic worm; that’s as intended. Yes, it also means I’ve compared humans to snails; that’s not as intended, it’s just that every analogy has its breaking point.

Also: yes, I know the difference between metonymy and synecdoche; no, I will not get twisted into knots over it. ↩

Gestae Commentaria

Comments for this post are closed pending repair of the comment system, but the Email/Twitter/Mastodon icons at page-top always work.