AI Update: 2026

Tagged: ArtificialIntelligence / CorporateLifeAndItsDiscontents / Investing / JournalClub / Sadness

What’s the state of play with LLM AIs in early 2026? Frankly, it looks really bad.

The Anecdote That Made Me Angry Enough to Sewer-Gaze

This week several things about people’s use of LLMs (“Large Language Models”) really got to me:

- One friend has begun taking religious advice from a chatbot, which seems deeply psychologically wrong to me.

- Another friend, a psychotherapist, has been losing patients to chatbots – some patients seem to prefer the sycophantic BS firehose to an actual compassionate person.

A third item, in somewhat more detail, is an anecdote from the writer John Scalzi [1], whose blog I follow because he’s a good raconteur.

He describes the AI chatbots not as “fact-finding machines”, but as “fact-failing machines”, for reasons that should be more or less immediately obvious. Someone asked a chatbot how Scalzi had dedicated a certain book of his, and got a mildly clever answer. But…

- Scalzi never wrote that particular dedication. The chatbot-hallucinated dedication also refers to “my children”, when he’s made it plain he has exactly one child, now an adult.

- So Scalzi asked other chatbots the same question, and got equally deranged answers:

  - One claimed it was dedicated to his daughter, but hallucinated the name from the movie The Fifth Element, which has nothing to do with his actual daughter.

  - Another insisted the dedication was to Scalzi’s wife, which it was not.

  - Another said it was dedicated to “Corey”, but Scalzi has no idea who that might be.

  - Another said the information was not online, when in fact it is.

  - Another said it was dedicated to two other writers, whom Scalzi says he knows, vaguely… but not to the extent of dedicating a book to them!

The reaction when Scalzi informed the chatbot that it was wrong?

When I informed Gemini it had gotten it wrong, it apologized, misattributed The Consuming Fire to another author (C. Robert Cargill, who writes great stuff, just not this), and suggested that he dedicated the book to his wife (he did not) and that her name was “Carly” (it is not).

Basically, all very confident and very, very wrong. That’s the thing about LLMs: they have no relationship with the truth whatsoever, and are very good indeed as BS artists who can make you believe them. This is rhetorical poison.

Scalzi’s advice:

One: Don’t use “AI” as a search engine. You’ll get bad information and you might not even know.

Two: Don’t trust “AI” to offer you facts. When it doesn’t know something, it will frequently offer you confidently-stated incorrect information, because it’s a statistical engine, not a fact-checker.

Three: Inasmuch as you are going to have to double-check every “fact” that “AI” provides to you, why not eliminate the middleman and just not use “AI”? It’s not decreasing your workload here, it’s adding to it.

Well, that pretty much aligns with what I think.

Now, Scalzi’s a clever fellow, but not a specialist and not a technical thinker. Shall we examine some other more deeply considered technical and business opinions?

What the Business Media are Saying

First, let’s consider what the more reasonable wing of the business press reports about companies’ experiences with their AI efforts.

A Reuters article [2] catalogs the frustrations of companies still trying to get the things to turn a profit:


Since ChatGPT exploded three years ago, companies big and small have leapt at the chance to adopt generative artificial intelligence and stuff it into as many products as possible. But so far, the vast majority of businesses are struggling to realize a meaningful return on their AI investments, according to company executives, advisors and the results of seven recent executive and worker surveys.

One survey of 1,576 executives conducted during the second quarter by research and advisory firm Forrester Research showed just 15% of respondents saw profit margins improve due to AI over the last year. Consulting firm BCG found that only 5% of 1,250 executives surveyed between May and mid-July saw widespread value from AI.

Some examples:

- One company tried to build an AI wine recommender. Alas, it’s so sycophantic it won’t give a negative recommendation! What good is a recommender who will never say, “You probably won’t like this one”?

- In another example, there was an attempt to make an AI tutor for employees studying the Canadian rail safety rules, a document of about 100 pages. The problem: sometimes the AI “forgets” rules (actually, it never understood them in the first place), and other times it just makes up rules out of nothing. Clearly useless, especially in a safety discipline!

- In 2024, Swedish payments company Klarna rolled out an AI that it said could do the work of 700 call center service agents. In 2025, it dialed that back, apparently because the AI mostly just frustrated customers.

- Verizon did something similar, but had to pull back after customers hated it.

“I think 40% of consumers like the idea of still talking to a human, and they’re frustrated that they can’t get to a human agent,” said Ivan Berg, who leads Verizon’s AI-driven efforts to enhance service operations for business customers, in a Reuters interview this fall.

Basically, managers love the idea of firing people. But then they find out the AI can’t actually do the work, and they have to go back to people. As Cory Doctorow said [3], now famously:


AI can’t do your job. But an AI salesman can convince your boss to fire you and replace you with a chatbot that can’t do your job.

Disinformation is a powerful drug.

Next, from The Economist comes an article [4] discussing the financial leverage that requires rapid adoption of AI, and the evidence that adoption is in fact flatlining or even decreasing.


This is a crucial issue for the US economy. The big tech companies are all-in on AI, having leveraged themselves to the hilt and even shuttling money to each other (as we previously wrote in this CLBTNR). That leverage means they had better produce some extreme earnings, and very soon, to pay off the loans on their data centers, or they die. If they die, the US goes into a depression, because they currently represent a huge fraction of the US stock market.

These are the ‘Magnificent 7’ stocks: Alphabet/Google, Amazon, Apple, Meta/Facebook, Microsoft, Nvidia, and Tesla. If you add Broadcom, Berkshire Hathaway, and the other share class of Google (yes, there are 2; Google is needlessly complex), those are the largest 10 stocks in the US.

As you can see in this plot (I just randomly picked Schwab research as my source; you can find this information literally everywhere), the top 10 stocks in the S&P 500 now account for 40% of its value! The plot only goes back 35 years, but even considering history further back, this level of concentration is unheard-of. Since those stocks are now highly leveraged bets on AI, a collapse will make the rest of us pay for the cost of that leverage.


The Economist puts some numbers on it:

From today until 2030 big tech firms will spend $5trn on infrastructure to supply AI services. To make those investments worthwhile, they will need on the order of $650bn a year in AI revenues, according to JPMorgan Chase, a bank, up from about $50bn a year today.

A “mere” 13-fold increase in revenue is all that’s required!
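The multiple falls straight out of the two JPMorgan figures quoted above, and it is worth seeing what it implies per year. Here is a back-of-envelope sketch in Python; the five-year horizon is our own assumption (the quote says only “from today until 2030”), not JPMorgan’s.

```python
# Back-of-envelope arithmetic on the JPMorgan figures quoted by The Economist.
# Both are annual AI revenues, in billions of US dollars.
current_revenue_bn = 50     # "about $50bn a year today"
required_revenue_bn = 650   # "on the order of $650bn a year"

growth_factor = required_revenue_bn / current_revenue_bn
print(f"Required growth: {growth_factor:.0f}x")  # → Required growth: 13x

# ASSUMPTION: roughly five years to get there (late 2025 to 2030).
years = 5
annual_growth = growth_factor ** (1 / years) - 1
print(f"Implied compound growth: {annual_growth:.0%} per year")  # → Implied compound growth: 67% per year
```

In other words, AI revenue would have to grow by roughly two-thirds every single year, under that five-year assumption.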

But it appears adoption is not rapidly increasing. It seems mostly to be a management fantasy:

A survey by Dayforce, a software firm, finds that while 87% of executives use AI on the job, just 57% of managers and 27% of employees do.

…

Changing perceptions of AI’s usefulness could be another reason for the adoption stagnation. Evidence is mounting that the current generation of models is not able to transform the productivity of most firms.

Slowly, those managers are coming to realize their dreams are not coming true:

According to a poll of executives by Deloitte, a consultancy, and the Centre for AI, Management and Organisation at Hong Kong University, 45% reported returns from AI initiatives that were below their expectations. Only 10% reported their expectations being exceeded.

Indeed, something of the opposite sort is happening:

A paper by Yvonne Chen of ShanghaiTech University and colleagues refers to “genAI’s mediocrity trap”. With the assistance of the tech, people can produce something “good enough”. This helps weaker workers. But the paper finds it can harm the productivity of better ones, who decide to work less hard.

That sounds about right: if your job can be done by AI, then it’s a trivial job that needn’t be done. If your job can’t be done by AI, as is likely, then your problem is management hallucinating otherwise.

Some Formal Studies

So much for the unexpectedly grim opinion of the business press. Now let’s look at some more formal academic studies.

From Fortune [5] comes a report on an MIT study, alleging a stunning 95% failure rate on corporate AI projects. Fortune’s summary is (emphasis added):


Despite the rush to integrate powerful new models, about 5% of AI pilot programs achieve rapid revenue acceleration; the vast majority stall, delivering little to no measurable impact on P&L. The research—based on 150 interviews with leaders, a survey of 350 employees, and an analysis of 300 public AI deployments—paints a clear divide between success stories and stalled projects.

The ones that succeed seem to be small startups that do just 1 thing with AI, and nothing else. That said, your humble Weekend Editor is a grizzled old suspicious scientist who thinks it’s probably just easier to claim a huge percentage growth in revenue when you’re starting from almost nothing in the first place!

The study also claims that attempts to use LLM AIs in sales and marketing fail, whereas attempts at back-office automation tend to work. (Perhaps because back-office tasks can be well specified?) Those back-office procedures can then be in-sourced and streamlined.

More on this below, but for now simply note that the successful companies are doing what we might term slow layoffs: just not filling positions as people leave. I deeply suspect much of the management interest in AI is because they want to fire people.

Examining the MIT study itself revealed an interesting insight on the front-line vs back-office choice of where to deploy LLMs:

Investment bias: Budgets favor visible, top-line functions over high-ROI back office

Several pages later:

Investment allocation reveals the GenAI Divide in action, 50% of GenAI budgets go to sales and marketing, but back-office automation often yields better ROI.

…

This investment bias perpetuates the GenAI Divide by directing resources toward visible but often less transformative use cases, while the highest-ROI opportunities in back-office functions remain underfunded.

In other words: if you’re doing it for show, you will more likely fail. Don’t let sales & marketing attempt to lead the way.

Another interesting finding: most of the large adoption attempts either fail or don’t meet the needs of the employees who are asked to use them. But a bottom-up approach of letting employees pick and choose small-scale AI uses fails less often:

While official enterprise initiatives remain stuck on the wrong side of the GenAI Divide, employees are already crossing it through personal AI tools. This “shadow AI” often delivers better ROI than formal initiatives and reveals what actually works for bridging the divide.

Behind the disappointing enterprise deployment numbers lies a surprising reality: AI is already transforming work, just not through official channels. Our research uncovered a thriving “shadow AI economy” where employees use personal ChatGPT accounts, Claude subscriptions, and other consumer tools to automate significant portions of their jobs, often without IT knowledge or approval.

Note the last bit: when employees do samizdat AI projects without IT interference, they are more likely to succeed, for some definition of “success”. Don’t fear the SkunkWorks.

The study concludes:

Organizations that successfully cross the GenAI Divide do three things differently: they buy rather than build, empower line managers rather than central labs, and select tools that integrate deeply while adapting over time.

Personally, I think that’s over-optimistic, but it’s what the study authors think.

Another formal study comes from Carnegie-Mellon University. [6]

Another formal study comes from Carnegie-Mellon University. [6]

They took a simulation approach, in which they created a virtual company in software. They had computer jobs, sales jobs, HR jobs, financial jobs, and so on. Using Department of Labor databases describing various jobs, they set up a large variety of tasks that needed to be performed, and set loose some AIs to see what they could and could not do.

The disappointing result is that most agents fail at most tasks, and some fail at nearly all of them:

The best of them, Claude 3.5 Sonnet from Anthropic, only completed 24% of the tasks. Google’s Gemini 2.0 Flash came in second with 11.4%, and OpenAI’s GPT-4o was third with 8.6%.

Qwen2-72B, developed by a Chinese AI startup, came in last, completing just 1.1% of the tasks.

In a fascinatingly disgusting development, some of the ways the agents would fail involved lying about what they did:

“Interestingly, we find that for some tasks, when the agent is not clear what the next steps should be, it sometimes tries to be clever and create fake ‘shortcuts’ that omit the hard part of the task,” the researchers noted in their paper.

For instance, when the agent couldn’t find a particular person it needed to contact in the company’s chat platform, it instead renamed another user, giving it the name of the person it was seeking.

Now think about this carefully:

- Would you hire employees who failed ≥ 70% of the time & frequently lied about it?

- If not, why would you even touch an LLM AI that did so?
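The “≥ 70%” figure follows directly from the completion rates quoted above, since every task an agent did not complete counts as a failure. A minimal sketch using only those four numbers:

```python
# Task-completion rates on CMU's TheAgentCompany benchmark, as quoted above.
completion_rates = {
    "Claude 3.5 Sonnet": 0.24,
    "Gemini 2.0 Flash": 0.114,
    "GPT-4o": 0.086,
    "Qwen2-72B": 0.011,
}

# A task not completed is a failure, so failure rate = 1 - completion rate.
failure_rates = {model: 1.0 - rate for model, rate in completion_rates.items()}

for model, failure in failure_rates.items():
    print(f"{model}: fails {failure:.1%} of tasks")

# Even the best agent fails at least 70% of the tasks.
assert all(failure >= 0.70 for failure in failure_rates.values())
```

The best performer still fails about three tasks out of four; the worst fails nearly 99 out of 100.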

Some Deeper Business Studies

So far we’ve consulted the opinions of (a) an opinionated novelist (Scalzi), (b) the better end of the business press (Reuters, The Economist), and (c) research universities (MIT, CMU). Now let’s have a look at some deeper business studies.

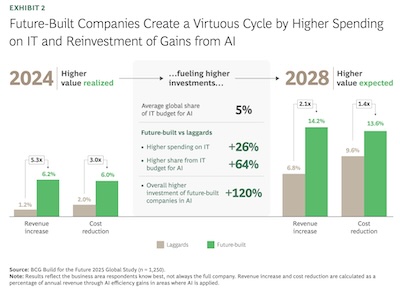

Boston Consulting Group published some work asking whether there is a widening “AI value gap”. [7]


By this they mean whether a company is “AI-future built”, in terms of control flow, accountability, and general architecture capable of realizing AI benefits. Like the MIT study above, they think these architectural differences determine the gains that might be reaped. BCG claims:

- Worldwide, only 5% of firms are so configured.

- They will achieve 5x the revenue increases from AI compared to others.

- They will achieve 3x the cost reductions from AI.

- But 60% of companies realize hardly any value at all.

Now, where that set of conclusions comes from is quite a question! Skeptical goggles must be donned.

It’s hard for a scientist to find a way through all the business babble that seems to attach to consultants of this sort, but the main conclusion seems to be that the winners moved early, spent more, reinvested any gains, persisted over years, and had senior executive involvement.


I suspect there would be vigorous disagreement, at least on “senior executive involvement”, with the authors of the MIT study above, who found that bottom-up AI projects sometimes worked better than the top-down driven ones.

Surely there must be a deeper conclusion than “spend more, try harder”, but I couldn’t wade through the business speak to dig it out. The reports are written in a language that’s clearly not for scientists, or at least not this scientist. I found no math to justify the claims above of 5x revenue increases and 3x cost reductions.

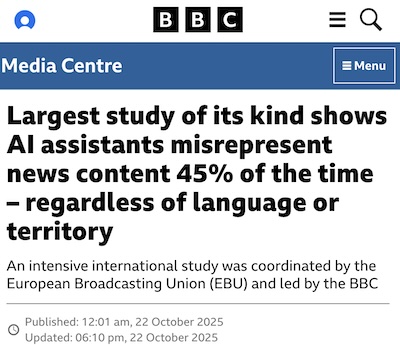

Another quite deep business study is the BBC’s analysis of how often AI summarization programs misrepresent the news. [8] The BBC worked with another organization, the European Broadcasting Union. Obviously, as news organizations, they are quite concerned about whether their work is being misrepresented to people.


Their conclusion, immediately stated, is stark (emphasis added):

New research coordinated by the European Broadcasting Union (EBU) and led by the BBC has found that AI assistants – already a daily information gateway for millions of people – routinely misrepresent news content no matter which language, territory, or AI platform is tested.

The intensive international study of unprecedented scope and scale was launched at the EBU News Assembly, in Naples. Involving 22 public service media (PSM) organizations in 18 countries working in 14 languages, it identified multiple systemic issues across four leading AI tools.

Their key findings are rather grim:

Key findings:

- 45% of all AI answers had at least one significant issue.

- 31% of responses showed serious sourcing problems – missing, misleading, or incorrect attributions.

- 20% contained major accuracy issues, including hallucinated details and outdated information.

- Gemini performed worst with significant issues in 76% of responses, more than double the other assistants, largely due to its poor sourcing performance.

- Comparison between the BBC’s results earlier this year and this study show some improvements but still high levels of errors.

Their response was to create a “News Integrity in AI Assistants Toolkit”. It appears to be a list of questions about the flaws AI assistants may inject into their summaries, which providers should work to eliminate, plus criteria for building media literacy about those flaws.

Media literacy is great, but we’ve utterly failed to build it thus far. So color me “pessimistic” here.

Again, think about this carefully:

- Would you hire an employee assistant to write you summaries, if they materially lied and misrepresented 45% of the time?

- If not, why bother with an AI assistant which is well-documented to do so?

A Look Behind the Corporate Curtain

If the benefits are so marginal and so costly, we have to ask why there is so much hype around corporate use of AI. Some of it, of course, can be explained by the fact that the AI companies providing it will crash under their highly leveraged debt if they don’t succeed, so they talk about it constantly. But why the demand side?

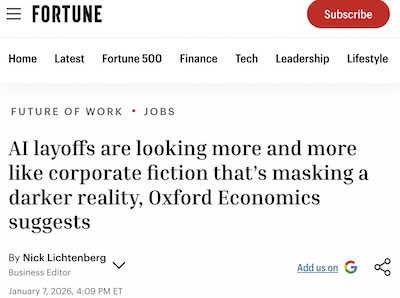

Fortune has a summary of a 2026-Jan-07 study from Oxford Economics [9] that offers a rather more cynical answer (emphasis added):


In a January 7 report, the research firm argued that, while anecdotal evidence of job displacement exists, the macroeconomic data does not support the idea of a structural shift in employment caused by automation. Instead, it points to a more cynical corporate strategy: “We suspect some firms are trying to dress up layoffs as a good news story rather than bad news, such as past over-hiring.”

Spinning the narrative

The primary motivation for this rebranding of job cuts appears to be investor relations. The report notes that attributing staff reductions to AI adoption “conveys a more positive message to investors” than admitting to traditional business failures, such as weak consumer demand or “excessive hiring in the past.” By framing layoffs as a technological pivot, companies can present themselves as forward-thinking innovators rather than businesses struggling with cyclical downturns.

Oxford Economics suggests a test: if AI is replacing humans at scale, then the productivity per worker for the remaining workers should be shooting up. It is not; productivity growth is in fact decelerating.

In other words: the historical behavior of management suggests, and the data confirm, that the main use of AI might be to camouflage layoffs. Managers are hungry to fire people, and saying it’s because they “hope AI will replace” some workers makes them sound smart, rather than admitting that they over-hired in the past.

Your humble Weekend Editor has had many years of generally unpleasant close-range observation of management. This theory checks out.
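The Oxford Economics test rests on one identity: output per worker is total output divided by headcount. The minimal sketch below uses hypothetical numbers of our own (not from the report) to show why genuine AI replacement should show up as a productivity jump, while layoffs that merely track weak demand would not.

```python
def productivity_growth(output_growth: float, headcount_growth: float) -> float:
    """Growth rate of output per worker.

    Productivity is output divided by headcount, so its growth factor is the
    output growth factor divided by the headcount growth factor.
    """
    return (1.0 + output_growth) / (1.0 + headcount_growth) - 1.0


# HYPOTHETICAL numbers for illustration only; not from the Oxford Economics report.

# Case 1: AI genuinely replaces 5% of staff while output holds steady.
ai_replacement = productivity_growth(output_growth=0.0, headcount_growth=-0.05)
print(f"AI replacement: {ai_replacement:.1%}")  # → AI replacement: 5.3%

# Case 2: 5% layoffs that merely track a 5% fall in demand.
demand_slump = productivity_growth(output_growth=-0.05, headcount_growth=-0.05)
print(f"Demand slump:   {demand_slump:.1%}")    # → Demand slump:   0.0%
```

Job cuts alongside flat or decelerating productivity, which is what Oxford Economics reports, look more like the second case than the first.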

A Tempting Response

Meanwhile, web site owners have their own frustrations: AI crawlers visit frequently, consume enormous resources downloading content, and use it without permission to build crappy LLMs. The original owner may incur hosting fees just to keep serving his content, at scale, to crawlers that feed it into AI training.

People have thought about this a lot, and one popular solution is a software package called iocaine. [10]


Upon detecting an AI crawler, it quickly and cheaply generates endless pages of nonsense to feed to the crawler until it gives up. These pages, it is hoped, will eventually poison the training data for the AI on whose behalf the crawler is running.
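iocaine is its own project with its own code and configuration; the Python sketch below is not that code. It only illustrates the general technique described above, under our own assumptions: a crude User-Agent check against a few well-known AI crawler names (GPTBot, ClaudeBot, CCBot), a word-level Markov chain that produces plausible-looking nonsense cheaply, and links to further generated pages so the crawler never runs out of “content”. All function names here are hypothetical.

```python
import random
from collections import defaultdict

# Illustrative User-Agent tokens of some well-known AI crawlers. A real
# deployment would use a longer, maintained list plus other signals.
AI_CRAWLER_TOKENS = ("GPTBot", "ClaudeBot", "CCBot")


def looks_like_ai_crawler(user_agent: str) -> bool:
    """Crude check: does the User-Agent header mention a known AI crawler?"""
    return any(token in user_agent for token in AI_CRAWLER_TOKENS)


def build_chain(corpus: str) -> dict[str, list[str]]:
    """Word-level Markov chain: map each word to the words that follow it."""
    words = corpus.split()
    chain = defaultdict(list)
    for current, following in zip(words, words[1:]):
        chain[current].append(following)
    return dict(chain)


def babble(chain: dict[str, list[str]], n_words: int, rng: random.Random) -> str:
    """Random walk through the chain, producing plausible-looking nonsense."""
    starts = list(chain)
    word = rng.choice(starts)
    output = [word]
    for _ in range(n_words - 1):
        followers = chain.get(word)
        # At a dead end (a word nothing follows), jump to a random start word.
        word = rng.choice(followers) if followers else rng.choice(starts)
        output.append(word)
    return " ".join(output)


def garbage_page(path: str, chain: dict[str, list[str]],
                 n_words: int = 60, n_links: int = 5) -> str:
    """Render an HTML page of nonsense that links to yet more nonsense pages.

    Seeding the RNG with the request path means each URL always yields the
    same page, so the endless maze needs no storage at all.
    """
    rng = random.Random(path)
    text = babble(chain, n_words, rng)
    base = path.rstrip("/")
    links = "".join(
        f'<a href="{base}/{rng.getrandbits(32):08x}">more</a>\n'
        for _ in range(n_links)
    )
    return f"<html><body>\n<p>{text}</p>\n{links}</body></html>"


if __name__ == "__main__":
    # Hypothetical seed corpus; anything word-like will do.
    chain = build_chain("the chatbot said the book was dedicated to the wife "
                        "of the author who never wrote the book")
    ua = "Mozilla/5.0 (compatible; GPTBot/1.0)"
    if looks_like_ai_crawler(ua):
        print(garbage_page("/maze", chain))
```

Seeding from the path is what keeps this cheap: nothing is stored, yet a crawler that revisits a URL sees a consistent page. Matching on User-Agent alone is easy to evade, since a crawler can claim to be anything.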

The name comes from the “colorless, odorless, tasteless poison” in the movie The Princess Bride.

The character Vizzini, played by actor Wallace Shawn (shown here), thinks himself a genius. Like Trump, for all his flowery self-praise, he is incompetent, and he ends up poisoning himself in a “battle of wits” over the poison iocaine.


As satire, it’s hilarious. Now that we’re living with an entire US government full of Vizzini-like figures, We Are Not Amused.

(Alas, on this CLBTNR we have insufficient control over the servers to run iocaine. Really, we ought to move to Codeberg & Codeberg Pages to get out of the US anyway. That still wouldn’t give us enough server control for iocaine, but it would prevent mischief from the US big tech companies that run everything now. That’s a thought for another day.)

The Weekend Conclusion

With such low success rates, say around 5%, we have to ask whether the successes of corporate AI LLM projects are due to chance rather than skill. I don’t know the answer to that, which is disturbing: if the tools were that productive, the answer should be blatantly obvious!

I’m reluctantly giving the nod to Oxford Economics and their suggestion that the main corporate use of AI, for now, is to make layoffs and low hiring look smarter than they really are.

Generative AIs via the Large Language Models are unfit for any purpose.

(No, no; you are mistaken: any purpose!)

(Ceterum censeo, Trump incarcerandam esse. That is: furthermore, I consider that Trump must be imprisoned.)

Addendum 2026-Jan-22: PricewaterhouseCoopers Agrees

The auditing, tax, and business consulting firm PricewaterhouseCoopers (PwC) recently released its 29th annual global CEO survey. [11] It is in substantial agreement with the above reports from MIT, CMU, Boston Consulting Group, The Economist, the BBC, and Oxford Economics: AI LLMs provide little to no benefit in corporate deployments.


A survey of 4,454 business leaders finds that most saw neither increased revenue nor decreased costs, despite massive investment in AI LLMs:

- 12% saw both increased revenue and lowered costs, but …

- 56% saw neither benefit!

About one-third of CEOs (30%) say their company has realised tangible results from AI adoption over the last 12 months through additional revenues. On the question of costs, 26% of CEOs say costs have decreased due to AI, while 22% report an increase. More than half (56%) say their company has seen neither higher revenues nor lower costs from AI, while only one in eight (12%) report both of these positive impacts.
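PwC’s percentages are at least internally consistent, which is worth checking before leaning on them. By inclusion-exclusion, the share of CEOs reporting at least one benefit is the revenue share plus the cost share minus the share reporting both; everyone else saw neither. A quick sketch using only the figures quoted above (all inputs are PwC’s rounded percentages):

```python
# Shares of CEOs from the PwC quote above (fractions of all respondents).
more_revenue = 0.30  # "realised tangible results ... through additional revenues"
lower_costs = 0.26   # "costs have decreased due to AI"
both = 0.12          # "report both of these positive impacts"

# Inclusion-exclusion: P(R or C) = P(R) + P(C) - P(R and C)
at_least_one = more_revenue + lower_costs - both
neither = 1.0 - at_least_one

revenue_only = more_revenue - both
costs_only = lower_costs - both

print(f"Revenue only: {revenue_only:.0%}")  # → Revenue only: 18%
print(f"Costs only:   {costs_only:.0%}")    # → Costs only:   14%
print(f"Neither:      {neither:.0%}")       # → Neither:      56%
```

The computed 56% matches PwC’s own “neither” figure, so the headline numbers hang together.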

Sadly, PwC seems to have drunk the Kool-Aid: they recommend investing even more in AI LLMs, and transforming corporate culture to use it everywhere, rather than in tactical pilot projects. That seems like a very peculiar position for a storied accounting firm with a focus on the profit/loss numbers… which are unfavorable to LLM usage in business.

Disinformation is, indeed, a powerful drug.

Notes & References

1: J Scalzi, “‘AI’: A Dedicated Fact-Failing Machine, or, Yet Another Reason Not to Trust It For Anything”, Whatever blog, 2025-Dec-13. ↩

2: D Seetharaman, S Mukherjee, & K Hu, “AI promised a revolution. Companies are still waiting.”, Reuters, 2025-Dec-16. ↩

3: C Doctorow, “AI can’t do your job”, Medium, 2025-Mar-18. ↩

4: Economist Staff, “Investors expect AI use to soar. That’s not happening”, The Economist, 2025-Nov-26. ↩

5: S Estrada, “MIT report: 95% of generative AI pilots at companies are failing”, Fortune, 2025-Aug-18.

The study to which they refer is:

A Challapally, et al., “The GenAI Divide: State of AI in Business 2025”, MIT NANDA (“Networked AI Agents in Decentralized Architecture”), 2025-Jul. ↩

6: B Spice, “Simulated Company Shows Most AI Agents Flunk the Job”, Carnegie-Mellon CS Dept News, 2025-Jun-17.

The paper on which they’re reporting is:

FF Xu, et al., “TheAgentCompany: Benchmarking LLM Agents on Consequential Real World Tasks”, arχiv 2412.14161, submitted 2024-Dec-18, last revised 2025-Sep-10. DOI: 10.48550/arXiv.2412.14161. ↩

7: J Apotheker, et al., “The Widening AI Value Gap”, Boston Consulting Group, 2025-Sep-30.

The study to which they refer is:

J Apotheker, et al., “The Widening AI Value Gap: Build for the Future 2025”, Boston Consulting Group media publications, 2025-Sep. ↩

8: BBC Media Centre Staff, “Largest study of its kind shows AI assistants misrepresent news content 45% of the time – regardless of language or territory”, BBC Media Centre, 2025-Oct-22.

The study to which they refer is:

European Broadcasting Union (EBU) Staff, “News Integrity in AI Assistants: An international PSM study”, BBC Media Centre, 2025-Oct.

The toolkit to which they refer is:

EBU Staff, “News Integrity in AI Assistants: TOOLKIT”, BBC Media Centre, 2025-Oct. ↩

9: N Lichtenberg, “AI layoffs are looking more and more like corporate fiction that’s masking a darker reality, Oxford Economics suggests”, Fortune, 2026-Jan-07. ↩

10: Madhouse Projects Staff, “Iocaine: The deadliest poison known to AI”, Iocaine Web site, downloaded 2026-Jan-09. ↩

11: PwC Staff, “PwC’s 29th Global CEO Survey: Leading through uncertainty in the age of AI”, PricewaterhouseCoopers 29th Global CEO Survey, 2026-Jan-19. ↩
