A Few Wise Words on LLM AIs from Cory Doctorow

Tagged: ArtificialIntelligence / CorporateLifeAndItsDiscontents / Investing / NotableAndQuotable / Politics / Statistics

Cory Doctorow has a few (unfortunately) wise bits of advice on the approaching collapse of the LLM AI bubble.

Doctorow on the Inevitable

Cory Doctorow is a modern Canadian/British fiction writer and tech journalist of some note.

In fact, he is of such note that not only is there a Wikipedia page on him (link above), he is the subject of a joke in Randall Munroe’s XKCD #239. He was said to blog from a high-altitude balloon, while wearing a red cape and goggles. Showing off his famous ability to take a joke, he then showed up a few years later at a conference appearance with Munroe, wearing a red cape and goggles, to Munroe’s evident delight.

You know you’re part of the modern internet intellectual elite when XKCD makes fun of you, and you get to make fun back.

Doctorow’s opinion of the current LLM version of AI aligns more or less with ours here at Château Weekend: BS firehoses and bowls of warm sewage & broken glass, best approached not at all, or at least with appropriate safety equipment.

While I’m not one of his regular readers, his latest summary of the causes of the AI bubble and its imminent collapse [1] caught my eye.

First, he has an interesting insight as to why all the LLM AI development seems to be coming from the likes of Google, Microsoft, and “startups” that soak up billions in investment (emphasis added):

… the AI bubble is driven by monopolists who’ve conquered their markets and have no more growth potential, who are desperate to convince investors that they can continue to grow by moving into some other sector, e.g. “pivot to video,” crypto, blockchain, NFTs, AI, and now “super-intelligence.” Further: the topline growth that AI companies are selling comes from replacing most workers with AI, and re-tasking the surviving workers as AI babysitters (“humans in the loop”), which won’t work.

We’ve allowed so much concentration of capital in oligarchs and in about 7 giant tech companies that their growth alone determines our economic future. They are desperate to “pivot to” something that will grow. (Whenever I heard management say “pivot to X”, for whatever value of X, I was always disappointed that they never admitted they were wrong about what they demanded we do instead of X in the past.) In this case, the “pivot to AI” has management drooling so hard because they fantasize they can just fire most of those pesky employees, with their annoying demands for pay, insurance, time off, and retirement. (Not to mention diversity, equity, and inclusion.)

This is exactly the argument made in the Industrial Revolution for laying off cloth-makers and letting them starve. Brian Merchant’s excellent history, Blood in the Machine [2], illustrates this in great detail with comparisons to modern oligarchs and monopolies.

Merchant quotes the historian Frank Peel, capturing the mood of the elite of 1811 (and of today!) in their contempt for workers thusly (p. 44), with the suggestion of the ‘rational’ economic alternative for workers:

“Had they been better instructed, they would have known that it was their duty to lie down in the nearest ditch and die.”

In 1811, at the height of the cloth-making revolution in England, oligarchs, aristocrats, and monopolies were the rule of the day. This is the inevitable way in which those things warp human minds. Like the fictional ring of Sauron, this sort of economic power tempts with great fantasies of fascist power, but in the end morally rots the holder to destruction.

That is, of course, a dark and hopeless fantasy on the part of the wealthy:

Finally: AI cannot do your job, but an AI salesman can 100% convince your boss to fire you and replace you with an AI that can’t do your job, and when the bubble bursts, the money-hemorrhaging “foundation models” will be shut off and we’ll lose the AI that can’t do your job, and you will be long gone, retrained or retired or “discouraged” and out of the labor market, and no one will do your job. AI is the asbestos we are shoveling into the walls of our society and our descendants will be digging it out for generations …

The comparison to asbestos is apt: we are polluting much of our information resources with AI slop that is either trivial or outright false, and making it harder and harder to tell what is AI slop and what is not. Having had to have asbestos removed from an older house, this hits home for me as a near-exact truth.

The financial arrangements are equally inane, in a way that utterly boggles my mind as to why it is legal, or survives even a cursory audit. Microsoft and OpenAI are clearly creating a circular flow of money, pretending it’s an investment, and letting OpenAI just set fire to it all:

That barely scratches the surface of the funny accounting in the AI bubble. Microsoft “invests” in Openai by giving the company free access to its servers. Openai reports this as a ten billion dollar investment, then redeems these “tokens” at Microsoft’s data-centers. Microsoft then books this as ten billion in revenue.

Nvidia does more or less the same thing with data centers, funding them with money that is paid back to them to purchase GPUs:

That’s par for the course in AI, where it’s normal for Nvidia to “invest” tens of billions in a data-center company, which then spends that investment buying Nvidia chips. It’s the same chunk of money is being energetically passed back and forth between these closely related companies, all of which claim it as investment, as an asset, or as revenue (or all three).
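To make the round trip concrete, here is a toy ledger, offered as a sketch only. The two firms, the single $10B figure, and the three reporting labels are hypothetical, and the model ignores everything a real set of books would include (cost of the chips delivered, equity stakes received, depreciation, taxes). It shows only the arithmetic of the claim: the same money can appear under several headline labels while no new cash enters the loop.

```python
from collections import defaultdict


def run_round_trip(amount):
    """Pass `amount` from a chipmaker to a data-center firm and back again.

    Returns (cash, reported): net cash change per firm, and the headline
    figures each firm could report. All names are hypothetical.
    """
    cash = defaultdict(float)
    reported = defaultdict(float)

    # Step 1: the chipmaker "invests" in the data-center company.
    cash["chipmaker"] -= amount
    cash["datacenter"] += amount
    reported["chipmaker: investment"] += amount

    # Step 2: the data-center company spends that money on the chipmaker's GPUs.
    cash["datacenter"] -= amount
    cash["chipmaker"] += amount
    reported["chipmaker: revenue"] += amount
    reported["datacenter: hardware asset"] += amount

    return dict(cash), dict(reported)


cash, reported = run_round_trip(10.0)  # billions of dollars, hypothetical
headline_total = sum(reported.values())  # sum of the three headline figures
net_new_cash = sum(cash.values())  # cash that actually entered the loop

print("net cash change per firm:", cash)
print("headline figures:", reported)
print("headline total:", headline_total, "| net new cash:", net_new_cash)
```

In this toy, 10 units of cash yield 30 units of headline figures and zero net cash for either firm, which is the pattern the quote describes.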

I thought this sort of circular shell-company thing was illegal? Apparently not, or at least not in these modern days when “illegal” is meaningless if it inconveniences oligarchs, monopolies, or friends of Trump.

Writing in Locus (Doctorow sometimes writes SF, so that’s a natural venue for him), he predicts how people will be forced into essentially bad jobs as mere assistants to LLMs that are nearly always wrong. [3] He uses some delightful jargon from automation:

- A centaur is when the human is on top, using the AI as an assistant (body of the horse).

- A reverse centaur is when the AI is in charge, forcing the human to fact-check every single thing it does, repetitively.

Summary: Centaur good, reverse centaur very bad.

(I was unable to track down the originator of this term; let me know if you do.)

Since LLMs are highly tuned to sound convincing, even when writing computer code, most of the stuff they write is very, very difficult to fact-check and debug. Assigning a human to wade through the slop looking for plausible but wrong bits is hopeless.

That will quickly collapse into low-paid, low-morale workers who don’t really bother to check on the AI slop: reverse centaurs.

There appears to be little we can do about this. We could just walk away from it, but given the obstinacy of our oligarchs, that is unlikely. The result will probably be an economic collapse, happening at a time when the US government is in a state of fascist collapse and the world careens to war on multiple fronts.

That will be very, very ugly.

As for what one might see next, Doctorow offered this advice to the head of LLM AI strategy at Cornell, for the university’s benefit as an institution:

Plan for a future where you can buy GPUs for ten cents on the dollar, where there’s a buyer’s market for hiring skilled applied statisticians, and where there’s a ton of extremely promising open source models that have barely been optimized and have vast potential for improvement.

AI isn’t going to wake up, become superintelligent and turn you into paperclips – but rich people with AI investor psychosis are almost certainly going to make you much, much poorer.

(Explanatory link about paperclippers added.)

So you might, in the best possible case, be able to pick through the rubble for parts to salvage. As a former “skilled applied statistician”, I’m glad I’m retired now. That’s all assuming you survive the intervening chaos.

So how close are we to a rise in unemployment, recession, or depression?

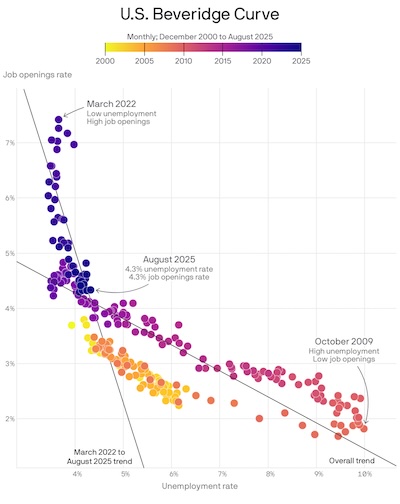

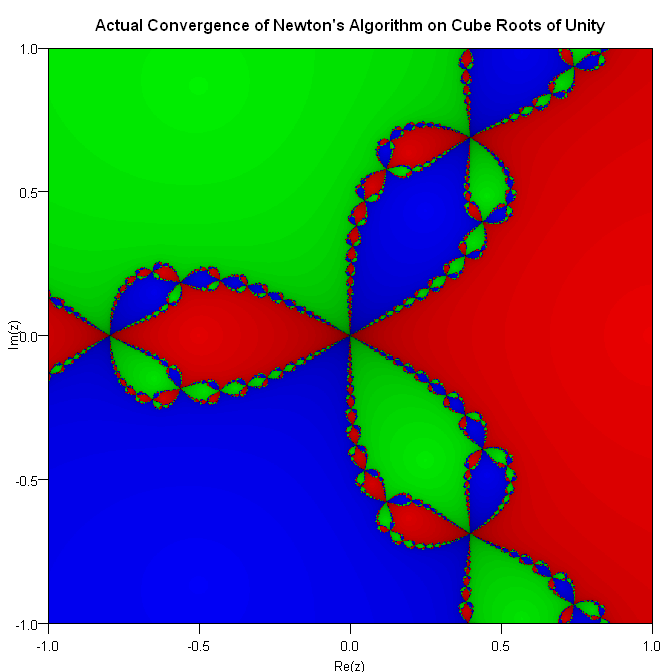

An article on Axios [4] tells us of the almost-alarming state of the Beveridge curve, shown here.

- This curve, popularized by British economist William Beveridge in the 1940s, plots the monthly unemployment rate (presumably the more widely reported U3 in the US, not the more comprehensive U6?) on the horizontal axis and the number of job openings as a percent of total positions on the vertical axis.

- When unemployment is low, we expect to see a lot of job openings being advertised (top left). Employers have trouble filling openings, because everybody’s already got a job, and thus openings pile up.

- When unemployment is high, we expect to see very few job openings (bottom right), because people would immediately take those jobs.

What the shape of the curve should be in between is the subject of much debate, and some nonlinear dynamics. It looks to me like a negative power law. But having been warned by the always amusingly informative Cosma Shalizi about the difficulty of power law fits [5], I won’t attempt it. (Hint: you can’t “just take logs” and expect anything to work! Also, many fat-tailed distributions are hard to distinguish from power laws unless you have huge amounts of data to sample the tails.)
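For the curious, here is a minimal sketch of the alternative to “just taking logs” that reference [5] recommends for the tail of a distribution: the maximum-likelihood estimate of the exponent, α̂ = 1 + n / Σ ln(xᵢ / x_min), with approximate standard error (α̂ − 1)/√n. The data are synthetic, the seed, sample size, and true exponent are arbitrary choices, and x_min is assumed known (in practice it must be estimated too). This applies to distribution tails, not to the Beveridge data, and it says nothing about whether a power law is the right model in the first place.

```python
import math
import random


def sample_power_law(n, alpha, xmin, rng):
    """Draw n samples from p(x) proportional to x**(-alpha), x >= xmin,
    by inverse-transform sampling."""
    return [xmin * (1.0 - rng.random()) ** (-1.0 / (alpha - 1.0)) for _ in range(n)]


def mle_exponent(xs, xmin):
    """Continuous power-law MLE for a known xmin.

    Returns (alpha_hat, approximate standard error).
    """
    tail = [x for x in xs if x >= xmin]
    n = len(tail)
    alpha_hat = 1.0 + n / sum(math.log(x / xmin) for x in tail)
    return alpha_hat, (alpha_hat - 1.0) / math.sqrt(n)


rng = random.Random(42)  # fixed seed so the run is repeatable
samples = sample_power_law(20000, alpha=2.5, xmin=1.0, rng=rng)
alpha_hat, std_err = mle_exponent(samples, xmin=1.0)
print(f"true alpha = 2.5, estimated alpha = {alpha_hat:.3f} +/- {std_err:.3f}")
```

Even with a clean estimate like this, a lognormal or other fat-tailed sample can produce a similar-looking fit, which is why the paper pairs the estimator with goodness-of-fit tests and comparisons against alternative distributions.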

- You want us to be on the upper left portion of the curve (blue/purple points): There, unemployment is low and employers can move their number of job openings up & down without affecting much of anything. Good times for workers, freedom to operate for employers.

- You do not want us to be in the lower right portion of the curve (reddish points): Here, unemployment is high. If employers lower the number of openings even just a little bit, then unemployment skyrockets further up.
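The asymmetry between the two ends of the curve can be sketched numerically. The hyperbola u × v = k used below is a stylized stand-in, not a fit to the Axios data, and the constant k = 20 and the starting vacancy rates are invented for illustration. The point is only that the same half-point drop in the job openings rate costs far more unemployment on the lower right than on the upper left.

```python
def unemployment_from_vacancies(v, k=20.0):
    """Stylized Beveridge curve u * v = k, both rates in percent.

    The hyperbolic form and k are assumptions for illustration only.
    """
    if v <= 0:
        raise ValueError("vacancy rate must be positive")
    return k / v


def unemployment_rise(v_start, drop, k=20.0):
    """Rise in unemployment (percentage points) when the vacancy rate
    falls from v_start to v_start - drop along the stylized curve."""
    before = unemployment_from_vacancies(v_start, k)
    after = unemployment_from_vacancies(v_start - drop, k)
    return after - before


upper_left = unemployment_rise(7.0, 0.5)   # many openings, low unemployment
lower_right = unemployment_rise(3.0, 0.5)  # few openings, high unemployment

print(f"upper left:  +{upper_left:.2f} points of unemployment")   # about 0.22
print(f"lower right: +{lower_right:.2f} points of unemployment")  # about 1.33
```

On this made-up curve the same cut in openings does roughly six times the damage at the lower right, which is why sitting at the knee is uncomfortable.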

As the article points out, the US is now (2025-Aug) at the knee in the curve. Will we go back up to the good part, or down to the right into the bad part?

Nobody knows.

But I really don’t like it.

The Weekend Conclusion

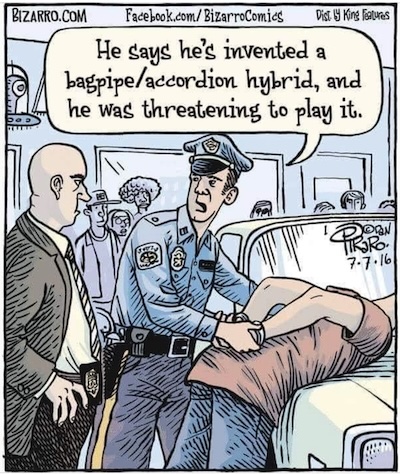

LLM AIs are sort of like the accordion/bagpipe hybrid skewered in this comic by Piraro: sounds inadvisable, like a generally bad idea that most people won’t like.

As it happens, I like bagpipe music, in the right place & time, especially when playing songs of the Second Jacobite Rebellion. (Yes, I am strange; are you only figuring this out now, 415 posts into this blog?) But I do not like the accordion, so the hybrid would still be bad, even for me.

More chilling is the use of force by police to illustrate the cartoon. We have now altogether too much of that in the US, with Trump deploying national military and state guard forces to Democratic cities for the crime of not loving Trump enough.

Another AI Winter will come soon, and though it might be deserved this time for the LLMs, it will be bad. About 1/3 of the US stock market valuation is tied up in the “Magnificent 7”: tech companies that are, at this point, pretty much a bet on LLMs succeeding… somehow. A massive failure there, in these highly correlated companies, will likely take down much of our economy.
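Why “highly correlated” matters can be shown with the standard formula for the variance of an equal-weighted block of n assets sharing a volatility σ and a pairwise correlation ρ: σ²(1/n + ((n − 1)/n)ρ). The numbers below (40% volatility, ρ = 0.8, a 50% fall in the block) are invented for illustration and are not estimates for any real companies; only the one-third weight comes from the paragraph above.

```python
import math


def block_volatility(n, sigma, rho):
    """Volatility of an equal-weighted block of n assets, each with
    volatility sigma and a common pairwise correlation rho."""
    if rho < -1.0 / (n - 1) or rho > 1.0:
        raise ValueError("rho outside the valid range for n assets")
    variance = sigma**2 * (1.0 / n + (n - 1.0) / n * rho)
    return math.sqrt(variance)


uncorrelated = block_volatility(7, 0.40, 0.0)  # diversification works
correlated = block_volatility(7, 0.40, 0.8)    # diversification mostly gone

# Direct hit to an index if a block with one-third weight falls by half
# (hypothetical scenario; ignores knock-on effects on everything else).
index_hit = (1.0 / 3.0) * 0.50

print(f"block volatility, rho = 0.0: {uncorrelated:.3f}")  # about 0.151
print(f"block volatility, rho = 0.8: {correlated:.3f}")    # about 0.364
print(f"direct index loss: {index_hit:.1%}")
```

With ρ near zero, seven stocks behave like a much calmer basket; with ρ near 0.8 they behave almost like one stock, so a shared bet going wrong hits all of them at once.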

Doctorow explains, repeatedly, in response to plaintive questions from students, that there just isn’t much you can do about it other than try to survive. Here’s what we’ve done Chez Weekend:

- We’ve revised our retirement investment portfolio to be less stock-oriented and less US-oriented.

- We’ve got Social Security and Medicare in place and working.

- We’ve recently gotten solar and batteries on our house in case of power grid disruption, and next week will pull the gas furnace in favor of a second heat pump. (More on that later, as we get some winter experience with the solar installation, in particular.)

I will be ecstatic if that turns out to be unnecessary.

(Ceterum censeo, Trump incarcerandam esse.)

Addendum 2025-Nov-17: Reverse Centaur

From Jamie McKelvie on BlueSky comes a perfect drawing of a reverse centaur:

Perfect. No notes.

Notes & References

1: C Doctorow, “Pluralistic: The real (economic) AI apocalypse is nigh (27 Sep 2025)”, Pluralistic blog, 2025-Sep-27.

2: B Merchant, “Blood in the Machine: The Origins of The Rebellion Against Big Tech”, Hachette Book Group, 2023. ISBN-13: 9780316487740.

3: C Doctorow, “Commentary: Cory Doctorow: Reverse Centaurs”, Locus, 2025-Sep-01.

4: N Irwin, “The worrying kink in this job openings, unemployment curve”, Axios, 2025-Oct-06.

5: A Clauset, CR Shalizi, and MEJ Newman, “Power-Law Distributions in Empirical Data”, SIAM Review 51:4 (2009). DOI: 10.1137/070710111.

NB: Regrettably paywalled. The earlier pre-print is still available on arχiv.
